Will Multimodal Be the Future of AI?

Artificial intelligence has seen tremendous advances over the past year. ChatGPT, GPT-4, Midjourney, DALL-E, Stable Diffusion and a growing number of other generative-AI tools are just a few of the recent developments.

One feature that all of these share is that they either are built on, or are themselves, large language models (LLMs). First developed only six years ago, this approach has since taken the world by storm.

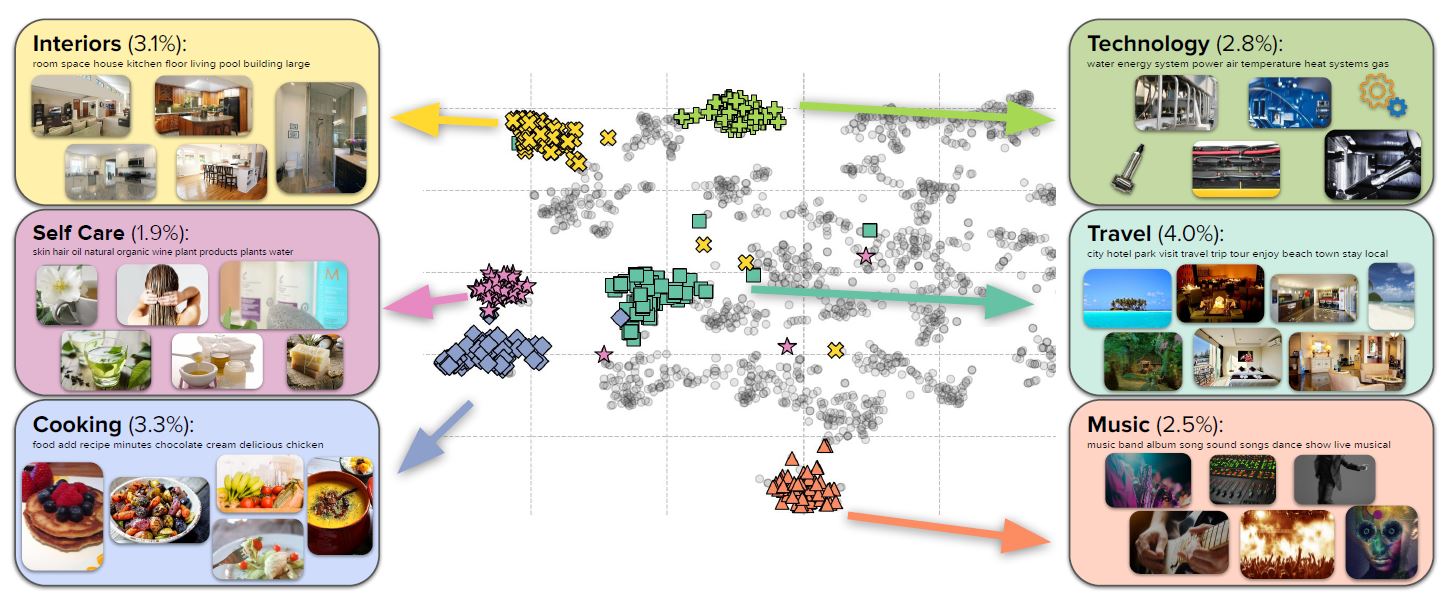

Now, new approaches to this concept are being developed and tested. As described in my recent GeekWire article, rather than relying on text alone, researchers at the Allen Institute for AI are looking into the improvements that can be achieved with a multimodal approach. By interleaving relevant images with the text of a billion-scale corpus, the team is able to achieve few-shot learning, in which the system infers and learns from a limited set of examples.

Similar to how we ourselves gather information from the world using our various senses, a multimodal AI approach draws from images, video, captions and so forth in order to broaden its view, so to speak. The resulting technique yields performance improvements well beyond the abilities of earlier text-only approaches.

This research comes from the Allen Institute for AI’s (AI2) MOSAIC group, which is dedicated to advancing machine commonsense. Another recent MOSAIC project, MERLOT RESERVE, also applied a multimodal approach, one that enabled out-of-the-box prediction and strong multimodal commonsense understanding.

I have no doubt that multimodal approaches will become a critical innovation, rapidly leading to still more powerful and useful AI tools and systems.

Read more about this:

AI2 researchers release new multimodal approach to boost AI capabilities using images and audio

New AI model shows how machines can learn from vision, language and sound together